Hi 👋, I am Jue Wang, an MS student at Southern University of Science and Technology and Shenzhen Institute of Advanced Technology (SIAT), CAS.

🔥🔥🔥 I am looking for a Ph.D. position. Here is my CV. If you would like to discuss potential opportunities or learn more about my qualifications, please feel free to contact me at jue.wang.info@gmail.com.

My research interests mainly include:

- Multimodal LLMs: LLM Reasoning, LLM Applications.

- Diffusion Models: High-Quality and Stable Image Generation.

- NeRF/3DGS: 3D Scene Reconstruction.

- Multispectral Detection: Multispectral/Hyperspectral Imaging and Detection.

📖 Education

- 2022.08 - now, Southern University of Science and Technology, Master's in Electronic and Information Engineering.

- 2018.08 - 2022.06, Northeastern University, Bachelor's in Information and Computing Science.

🔥 News

- 2024.07: Our paper on invisible gas detection has been accepted by CVIU (JCR Q1, CCF-B). 🎉

- 2024.06: We propose a new task and benchmark for understanding emotional triggers. Check out EmCoBench.

- 2024.03: We published a paper on RGB-T gas detection. Check out Invisible Gas Detection.

📝 Publications

Work in Progress: CVIU (1 accepted); AAAI (2 under review).

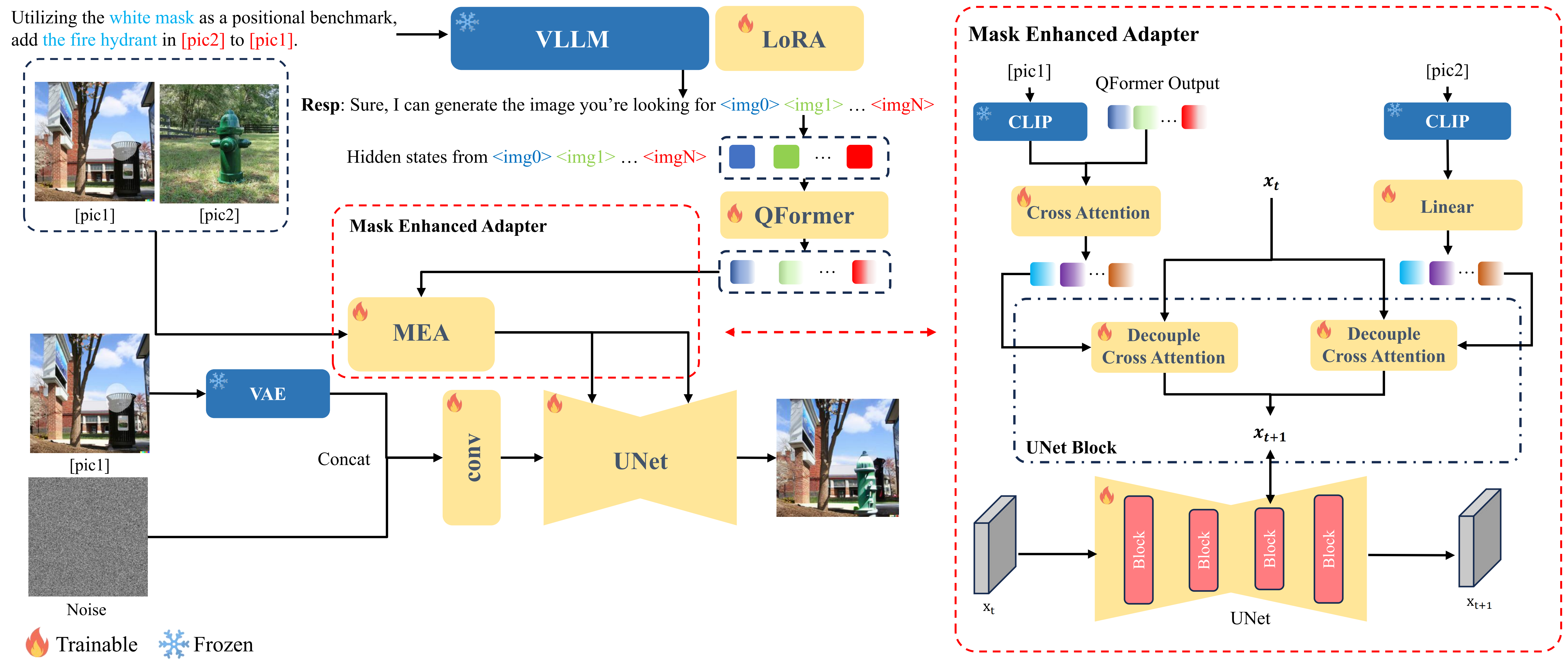

FlexEdit: Marrying Free-Shape Masks to VLLM for Flexible Image Editing

Jue Wang*, Yuxiang Lin*, Tianshuo Yuan, Zhi-Qi Cheng, Xiaolong Wang, Jiao GH, Wei Chen, Xiaojiang Peng (* denotes equal contribution)

arXiv | [Paper]

- We propose FlexEdit, an end-to-end image editing method that combines free-shape masks and language instructions, overcoming the limitations of traditional methods that require precise mask drawing.

- We introduce the Mask Enhanced Adapter (MEA), a structure that seamlessly enriches the mask embeddings of the Vision Large Language Model (VLLM) with image data, improving the model's ability to understand and execute complex editing tasks.

- We create the Free Shape Mask Instruction Edit (FSIM-Edit) benchmark, which includes a comprehensive dataset with diverse scenarios and editing instructions, to rigorously evaluate the performance of image editing models under free-shape mask conditions.

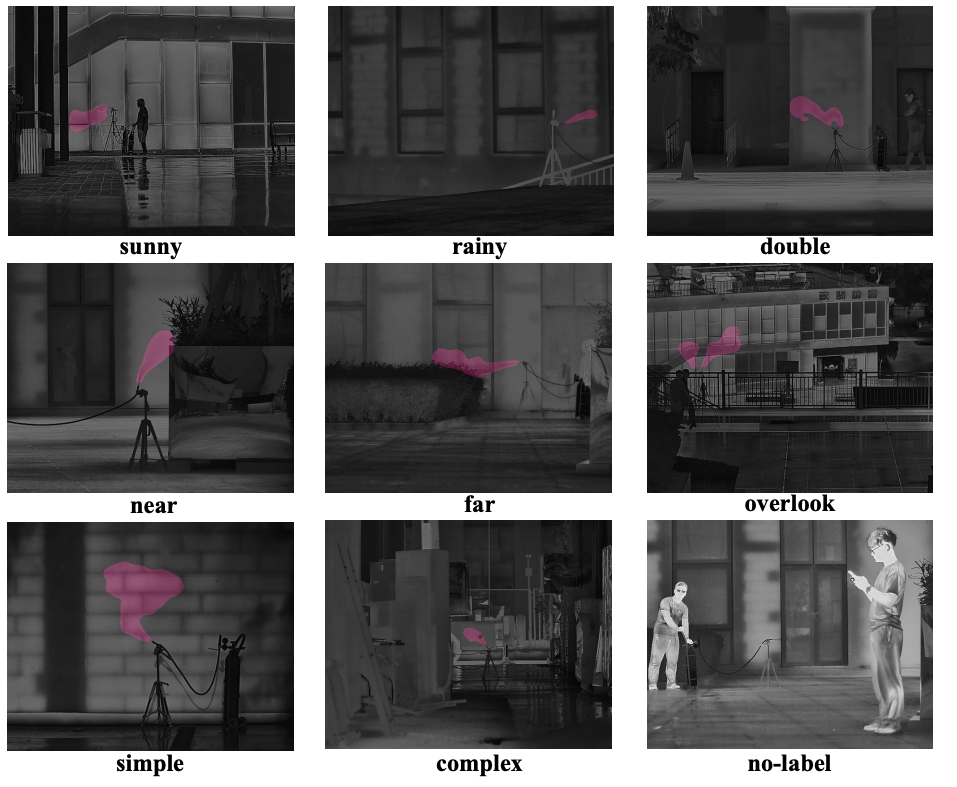

Invisible Gas Detection: An RGB-Thermal Cross Attention Network and A New Benchmark

Jue Wang*, Yuxiang Lin*, Qi Zhao, Dong Luo, Shuaibao Chen, Wei Chen, Xiaojiang Peng (* denotes equal contribution)

CVIU (JCR Q1, CCF-B) | [Paper][Code]

- We design the RGB-Thermal Cross Attention Network for invisible gas detection, which effectively integrates texture information from RGB images with gas information from thermal images.

- We propose the RGB-assisted Cross Attention Module for cross-modality feature fusion and the Global Textual Attention Module for extracting diverse contextual information.

- We introduce Gas-DB, the first comprehensive open-source gas detection database, containing approximately 1.3K well-annotated RGB-thermal images for gas detection algorithms.

💻 Experience

🎖 Honors and Awards

- 2024.01 Outstanding Graduate Student of Southern University of Science and Technology for the Academic Year 2022-2023.

- 2023.03 Winner Award in the 18th "Challenge Cup" SUSTech Intramural Competition.